Yeah I'm not really a Python guy, but I did some research and, unless I'm missing something, it looks like threading is pretty much busted in Python. Because of something called the Global Interpreter Lock (GIL, at least in the standard CPython interpreter), you can essentially only run one thread of Python at a time (per process). So threading is not useful at all for CPU-bound tasks like yours; the only time it would be even remotely useful is when there are multiple tasks that require lots of waiting (I/O-bound tasks).
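To illustrate the I/O-bound case in standard CPython, here is a minimal sketch that uses `time.sleep` as a stand-in for real waiting (file, network, database). `fake_io_task`, `run_sequential` and `run_threaded` are hypothetical helpers for this example only. Four 0.2 s waits run back to back should take at least 0.8 s, while on a thread pool they overlap and finish in roughly 0.2 s, because CPython releases the GIL while a thread sleeps or blocks on I/O.

```python
import time
from concurrent.futures import ThreadPoolExecutor


def fake_io_task(seconds):
    # time.sleep stands in for waiting on a file, socket or database;
    # CPython releases the GIL while a thread is sleeping or blocked on I/O
    time.sleep(seconds)
    return seconds


def run_sequential(delays):
    # one wait after the other: total time is roughly the sum of the delays
    start = time.perf_counter()
    results = [fake_io_task(d) for d in delays]
    return results, time.perf_counter() - start


def run_threaded(delays):
    # all waits overlap: total time is roughly the longest single delay
    start = time.perf_counter()
    with ThreadPoolExecutor(max_workers=max(1, len(delays))) as pool:
        results = list(pool.map(fake_io_task, delays))
    return results, time.perf_counter() - start


if __name__ == "__main__":
    delays = [0.2] * 4
    _, seq = run_sequential(delays)
    _, thr = run_threaded(delays)
    print("sequential: %.2f s, threaded: %.2f s" % (seq, thr))
```

Replacing the sleep with a pure-Python number-crunching loop would remove that advantage in CPython, which is the CPU-bound case described above.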

Your only hope would seem to be using the multiprocessing module's Process class to spin off individual processes, but I'm not sure how you'd share resources between them or how well that would play with TBC and IronPython.
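In standard CPython, the usual way around the GIL for CPU-bound work is multiprocessing. A minimal sketch, assuming CPython (further down the thread it's reported that multiprocessing doesn't work in IronPython inside TBC); `sum_of_squares`, `split` and `parallel_sum_of_squares` are hypothetical names. On the resource-sharing question: processes don't share memory by default, so each worker receives a pickled copy of its arguments and only the small per-chunk results are sent back and combined.

```python
from multiprocessing import Pool, cpu_count


def sum_of_squares(bounds):
    # CPU-bound work on one chunk; it runs in a separate process,
    # so it is not limited by the parent process's GIL
    start, end = bounds
    return sum(i * i for i in range(start, end))


def split(n, parts):
    # split range(n) into at most `parts` contiguous (start, end) chunks
    size = max(1, -(-n // parts))  # ceiling division, at least 1
    return [(s, min(s + size, n)) for s in range(0, n, size)]


def parallel_sum_of_squares(n, workers=None):
    workers = workers or cpu_count()
    # arguments and results are pickled between processes; nothing else is shared
    with Pool(processes=workers) as pool:
        return sum(pool.map(sum_of_squares, split(n, workers)))


if __name__ == "__main__":
    # the __main__ guard is required where child processes re-import this
    # module (the spawn start method, e.g. on Windows and macOS)
    print(parallel_sum_of_squares(2000000))
```

Copying large inputs (such as an 80,000-triangle vertex list) into every process has its own cost, so this only pays off when the per-chunk work clearly outweighs the transfer.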

Original Message:

Sent: 05-13-2023 22:44

From: Ronny Schneider

Subject: Multithreading/processing with IronPython and an actual performance increase?

Hello Dylan,

Which Python are you using? Definitely not IronPython 2.7, since that doesn't have time.perf_counter.
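If a timing macro has to run on both interpreters, one hedged option is to pick the timer at runtime. `time.perf_counter` exists from Python 3.3 on; this sketch falls back to `time.time` (wall clock, usually coarser) where it is missing. The `timer` name is just an illustration.

```python
import time

# use time.perf_counter where available (Python 3.3+); older interpreters
# such as IronPython 2.7 fall back to the lower-resolution time.time
timer = getattr(time, "perf_counter", time.time)

start = timer()
total = sum(range(100000))  # some work to measure
elapsed = timer() - start
print("elapsed: %.6f s" % elapsed)
```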

For something simple, like iterating through a for loop without accessing any variables or writing results back into an array, multithreading may be faster.

There are a few Google results if you search for "ironpython threading too slow", e.g. https://blog.devgenius.io/why-is-multi-threaded-python-so-slow-f032757f72dc

As you can see in my code, each thread needs to access a triangle/vertex list and write a result back into a list. Each thread also calls another Python subroutine thousands of times to check whether the normal ray passes through the triangle.
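The subroutine itself isn't shown in the thread. As a rough idea of what such a check involves, here is a minimal sketch, assuming the "normal ray" is the perpendicular from the point to the triangle's plane and that vertices are plain `(x, y, z)` tuples. It uses a standard barycentric-coordinate test; `perpendicular_to_triangle` and the vector helpers are hypothetical and not Ronny's actual code.

```python
def sub(a, b):
    return (a[0] - b[0], a[1] - b[1], a[2] - b[2])


def dot(a, b):
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]


def cross(a, b):
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])


def perpendicular_to_triangle(p, a, b, c):
    """Return (distance, foot) if the normal through p hits triangle abc, else None."""
    ab, ac = sub(b, a), sub(c, a)
    n = cross(ab, ac)          # unnormalised triangle normal
    nn = dot(n, n)
    if nn == 0:
        return None            # degenerate triangle (zero area)
    # project p onto the triangle's plane along the normal
    t = dot(sub(p, a), n) / nn
    foot = (p[0] - t * n[0], p[1] - t * n[1], p[2] - t * n[2])
    # barycentric coordinates (u, v, w) of the foot point
    ap = sub(foot, a)
    d00, d01, d11 = dot(ab, ab), dot(ab, ac), dot(ac, ac)
    d20, d21 = dot(ap, ab), dot(ap, ac)
    denom = d00 * d11 - d01 * d01   # equals nn, so it is non-zero here
    v = (d11 * d20 - d01 * d21) / denom
    w = (d00 * d21 - d01 * d20) / denom
    u = 1.0 - v - w
    if u < 0.0 or v < 0.0 or w < 0.0:
        return None            # the perpendicular passes outside the triangle
    # |p - foot| = |t| * |n|
    return abs(t) * nn ** 0.5, foot
```

For example, the point `(0.25, 0.25, 2.0)` above the triangle `(0, 0, 0)`, `(1, 0, 0)`, `(0, 1, 0)` gives a distance of 2.0, while `(2.0, 2.0, 1.0)` returns `None` because its foot lies outside the triangle.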

The write access is protected with the lock, so each thread can lock the results list when it wants to write to it. But that is rarely the case: there are not many perpendicular results to a DTM where the ray really passes through the triangle. All results where the ray passes outside the triangle are ignored and don't trigger the list lock. It depends on the mesh, but usually there are no more than a dozen valid results. I also ran it without the list lock, risking some dropped results, and the timing didn't improve. So the list lock isn't the culprit here.
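The pattern described here (each thread scans its own slice, and takes the lock only for the rare successful hit) can be sketched as follows. `hit_test` is a hypothetical stand-in for the ray/triangle check, and `worker`/`threaded_scan` are illustrative names. Note that in CPython the GIL still serializes the hit tests themselves, so this structure alone won't speed up CPU-bound work there; IronPython has no GIL, so there the threads can genuinely run in parallel.

```python
import threading


def worker(items, start, end, hit_test, results, lock):
    # each thread scans only its own slice [start, end) of the shared input
    for i in range(start, end):
        hit = hit_test(items[i])
        if hit is not None:
            # the lock is held only for the rare append, never during hit_test
            with lock:
                results.append((i, hit))


def threaded_scan(items, hit_test, thread_count):
    results, lock = [], threading.Lock()
    n = len(items)
    size = max(1, -(-n // thread_count))  # ceiling division
    threads = [threading.Thread(target=worker,
                                args=(items, s, min(s + size, n),
                                      hit_test, results, lock))
               for s in range(0, n, size)]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    # threads finish in arbitrary order, so sort for a stable result
    return sorted(results)
```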

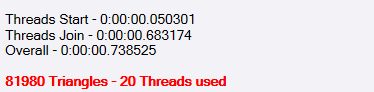

As you can see in the timing example below, the surface has over 80,000 triangles. The 10 list locks in this example can be ignored. The point to compute for was always the same, so the result was always the same.

Multithreaded with thread.start/thread.join and different numbers of threads. The more threads, the slower it becomes. I've also tested it with the macro compiled, with similar results.

| Threads | thread.start (s) | thread.join (s) | overall (s) |
|---|---|---|---|
| 1 | 0.003113 | 0.364594 | 0.372482 |
| 2 | 0.001892 | 0.344879 | 0.350754 |
| 3 | 0.011017 | 0.342697 | 0.356735 |
| 4 | 0.016411 | 0.365555 | 0.385948 |
| 5 | 0.011909 | 0.384430 | 0.401390 |
| 6 | 0.011909 | 0.416061 | 0.430992 |
| 7 | 0.013580 | 0.449432 | 0.469109 |
| 8 | 0.032875 | 0.481445 | 0.519226 |
| 9 | | | |
| 10 | 0.016006 | 0.568153 | 0.591125 |
| 11 | | | |
| 12 | 0.030899 | 0.548080 | 0.581978 |
| 13 | | | |
| 14 | 0.057503 | 0.615089 | 0.676575 |
| 15 | | | |
| 16 | 0.049637 | 0.629097 | 0.682747 |
| 17 | | | |
| 18 | 0.038902 | 0.609062 | 0.652992 |
| 19 | 0.053993 | 0.598091 | 0.657982 |
| 20 | 0.050301 | 0.683174 | 0.738525 |

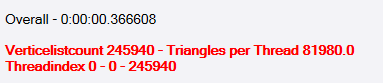

Single-threaded, without thread start/join, just calling the function directly.
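For anyone wanting to reproduce measurements like the table above, here is a sketch of a harness that times the same three phases plus a direct-call baseline. It assumes "overall" covers creating, starting and joining the threads (the post doesn't say exactly what it includes); `busy_work` is a hypothetical stand-in for the triangle scan, and each thread gets `total_iterations // thread_count` iterations (any remainder is ignored for simplicity).

```python
import threading
import time


def busy_work(n):
    # placeholder CPU-bound workload (hypothetical stand-in for the triangle scan)
    total = 0
    for i in range(n):
        total += i
    return total


def time_threads(thread_count, total_iterations):
    """Return (start_phase, join_phase, overall) in seconds."""
    share = total_iterations // thread_count
    t0 = time.perf_counter()
    threads = [threading.Thread(target=busy_work, args=(share,))
               for _ in range(thread_count)]
    t1 = time.perf_counter()
    for t in threads:
        t.start()
    t2 = time.perf_counter()
    for t in threads:
        t.join()
    t3 = time.perf_counter()
    return t2 - t1, t3 - t2, t3 - t0


def time_direct_call(total_iterations):
    # single-threaded baseline: no thread start/join, just call the function
    t0 = time.perf_counter()
    busy_work(total_iterations)
    return time.perf_counter() - t0


if __name__ == "__main__":
    n = 1000000
    print("direct call: %.6f s" % time_direct_call(n))
    for count in (1, 2, 4, 8):
        start, join, overall = time_threads(count, n)
        print("%2d threads: start %.6f  join %.6f  overall %.6f"
              % (count, start, join, overall))
```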

------------------------------

Ronny Schneider

Original Message:

Sent: 05-12-2023 00:07

From: Dylan Towler

Subject: Multithreading/processing with IronPython and an actual performance increase?

Hey Ronny,

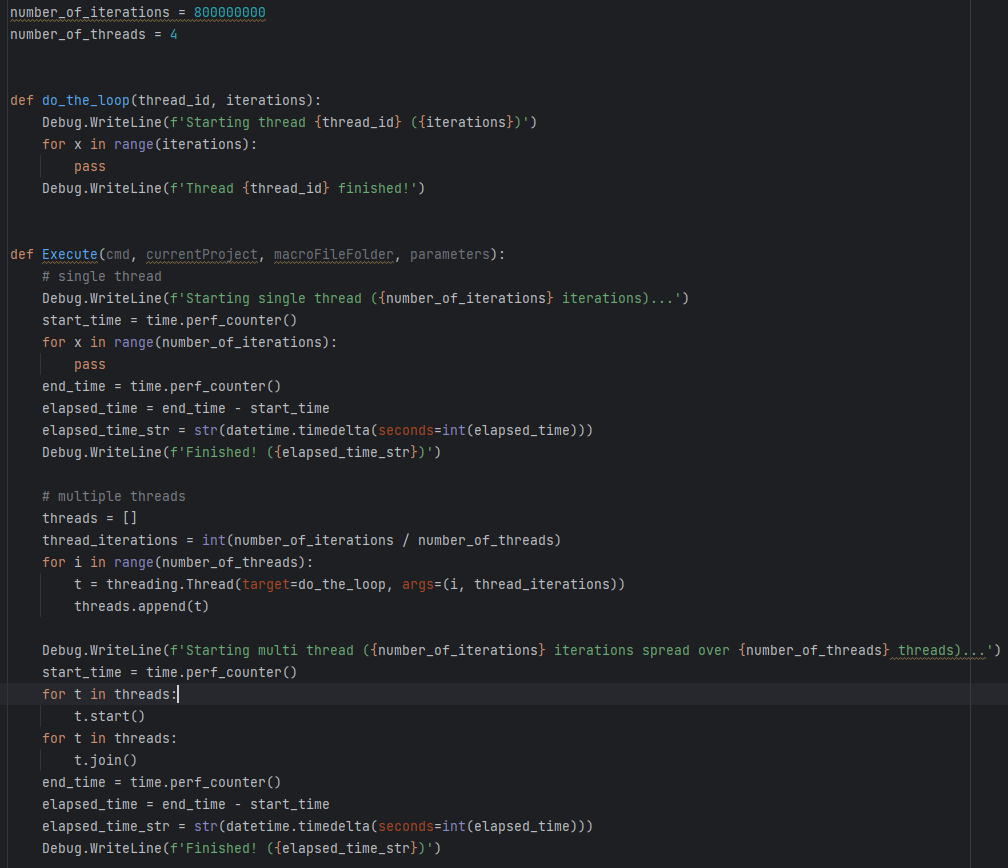

I had a play with this and the Python 3 threading seems to be working for me. For these kinds of tests it's always best to remove all complications, so I wrote a simple example TML (apologies for the screenshot; I couldn't get the Python code to format properly on this forum):

Results:

```
Starting single thread (800000000 iterations)...
Finished! (0:00:13)
Starting multi thread (800000000 iterations spread over 4 threads)...
Starting thread 0 (200000000)
Starting thread 1 (200000000)
Starting thread 2 (200000000)
Starting thread 3 (200000000)
Thread 1 finished!
The thread 0x4874 has exited with code 0 (0x0).
Thread 2 finished!
The thread 0x5b40 has exited with code 0 (0x0).
Thread 0 finished!
The thread 0x786c has exited with code 0 (0x0).
Thread 3 finished!
The thread 0xd44 has exited with code 0 (0x0).
Finished! (0:00:06)
```
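The test itself is only available as a screenshot. This is a hedged sketch of the same idea, not the actual TML: an empty counting loop, run once single-threaded and once split over four threads, with the iteration count scaled down. `count_up`, `split_evenly` and `run_threads` are hypothetical names. Running the same script under different interpreters (standard CPython has a GIL, IronPython does not) is one way to check how much the interpreter itself matters.

```python
import threading
import time


def count_up(iterations):
    # empty loop body: no shared data is read and no results are written back
    for _ in range(iterations):
        pass


def split_evenly(total, thread_count):
    # the first `extra` threads take one extra iteration so nothing is lost
    base, extra = divmod(total, thread_count)
    return [base + (1 if i < extra else 0) for i in range(thread_count)]


def run_threads(total, thread_count):
    shares = split_evenly(total, thread_count)
    threads = [threading.Thread(target=count_up, args=(s,)) for s in shares]
    start = time.perf_counter()
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    return time.perf_counter() - start


if __name__ == "__main__":
    total = 10000000  # scaled down from the 800000000 iterations in the log above
    start = time.perf_counter()
    count_up(total)
    print("single thread: %.2f s" % (time.perf_counter() - start))
    print("4 threads:     %.2f s" % run_threads(total, 4))
```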

When you said "Supposedly that is an issue with Python/Ironpython and "from threading import Thread". In Python you should use "from multiprocessing import Process" instead."... where did you get that from?

------------------------------

Dylan Towler

dylan_towler@buildingpoint.com.au

https://tbcanz.com/anz-toolbox/

Original Message:

Sent: 04-11-2023 19:58

From: Ronny Schneider

Subject: Multithreading/processing with IronPython and an actual performance increase?

Has anybody successfully implemented multithreading/multiprocessing in IronPython with an actual performance increase?

I played around with it over Easter. The code works and I can see all 20 cores being utilized, but it runs 50-100% slower than single-threaded.

Supposedly that is an issue with Python/Ironpython and "from threading import Thread". In Python you should use "from multiprocessing import Process" instead. But that doesn't work in IronPython: it doesn't seem to be implemented correctly in 2.7 or 3.4 and throws an error about missing modules.

For starters, I tried to pimp my perpendicular-to-DTM macro. Since you always need to test all triangles for a viable solution, where the normal from the point in question lies inside the triangle, it would benefit massively from multiprocessing: have each core do the computation for a certain range of the triangles, and at the end just output the shortest distance.
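The "each core takes a range, then output the shortest distance" idea can also be done without any lock: every thread writes its local best into its own slot of a preallocated list, and the main thread reduces the slots after `join()`. A minimal sketch, where `distance_fn` is a hypothetical stand-in that returns a distance for a valid perpendicular or `None` otherwise, and `chunk_min`/`shortest_distance` are illustrative names:

```python
import threading


def chunk_min(items, start, end, distance_fn, slots, index):
    # each thread scans its own range and keeps only its shortest distance
    best = None
    for i in range(start, end):
        d = distance_fn(items[i])  # None means "no valid perpendicular"
        if d is not None and (best is None or d < best):
            best = d
    # every thread writes to a different slot, so no lock is needed
    slots[index] = best


def shortest_distance(items, distance_fn, thread_count):
    n = len(items)
    size = max(1, -(-n // thread_count))  # ceiling division
    bounds = [(s, min(s + size, n)) for s in range(0, n, size)]
    slots = [None] * len(bounds)
    threads = [threading.Thread(target=chunk_min,
                                args=(items, s, e, distance_fn, slots, k))
               for k, (s, e) in enumerate(bounds)]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    # reduce the per-thread results to the single shortest distance
    found = [d for d in slots if d is not None]
    return min(found) if found else None
```

Compared with appending to a shared list under a lock, this trades one slot per thread for never locking at all.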

```python
import math  # needed for math.ceil in perpdisttotriangle
from threading import Thread, Lock
from multiprocessing import cpu_count, Process

# use multithreading to check all triangles for a solution
threadcount = cpu_count()
listlock = Lock()
# limit threadcount if there are more threads than work to do, otherwise we'd end up with double-ups in the result list
if threadcount > verticelist.Count / 3:
    threadcount = int(verticelist.Count / 3)
#threadcount = 1 # debug manual thread limiter

self.error.Content += '\n' + str(verticelist.Count / 3) + ' Triangles - ' + str(threadcount) + ' Threads used'

self.exc_info = None
threads = [Thread(target = self.perpdisttotriangle, args = (listlock, computeplumb, vertice1_sel, verticelist, dtmresults, threadcount, threadindex,)) for threadindex in range(threadcount)]
# start threads
for thread in threads:
    thread.start()
# wait for all threads to terminate
for thread in threads:
    thread.join()

if self.exc_info:
    exc_type, exc_obj, exc_tb = self.exc_info
    self.error.Content += '\nan Error occurred - Result probably incomplete\n' + str(exc_type) + '\n' + str(exc_obj) + '\nLine ' + str(exc_tb.tb_lineno)

def perpdisttotriangle(self, listlock, computeplumb, vertice1_sel, verticelist, dtmresults, threadcount, threadindex):
    try:
        trianglesperthread = math.ceil(verticelist.Count / 3.0 / threadcount) # must be 3.0 otherwise it won't be a float and is rounded down before I can round it up
        istart = int(0 + threadindex * trianglesperthread * 3)
        iend = int(0 + threadindex * trianglesperthread * 3 + trianglesperthread * 3)
        if iend > verticelist.Count:
            iend = verticelist.Count
        for i in range(istart, iend, 3):
            # ............................
```
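The range arithmetic in `perpdisttotriangle` can also be done with integer floor division (`//`), which behaves the same in IronPython 2.7 and 3.x and avoids the `3.0` float workaround. A sketch, with `triangle_ranges` as a hypothetical helper that returns the `(istart, iend)` vertex-index pairs for a flat vertex list (3 vertices per triangle) and skips empty trailing ranges:

```python
def triangle_ranges(vertex_count, thread_count):
    """Split a flat vertex list (3 vertices per triangle) into per-thread ranges.

    Returns (istart, iend) pairs in vertex indices, always on triangle boundaries.
    """
    triangles = vertex_count // 3
    # never more threads than triangles, and at least one
    thread_count = max(1, min(thread_count, triangles))
    # integer ceiling division: no float conversion needed
    per_thread = -(-triangles // thread_count)
    ranges = []
    for index in range(thread_count):
        istart = index * per_thread * 3
        iend = min(istart + per_thread * 3, triangles * 3)
        if istart < iend:  # skip ranges that would be empty
            ranges.append((istart, iend))
    return ranges
```

Each pair can be passed straight to `range(istart, iend, 3)`, as in the loop above.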

------------------------------

Ronny Schneider

------------------------------